
I’m excited to announce that AWS CodeBuild now supports parallel test execution, so you can run your test suites concurrently and reduce build times significantly.

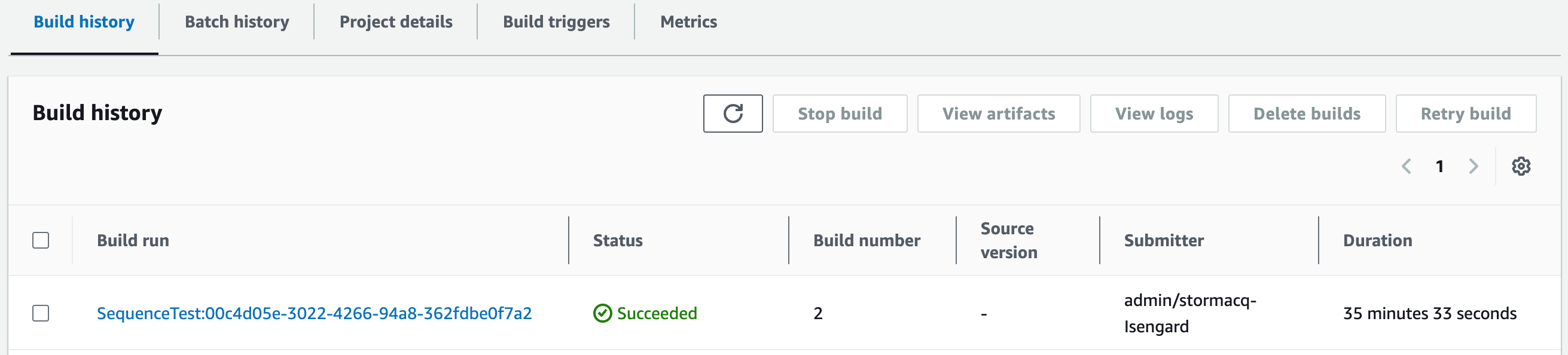

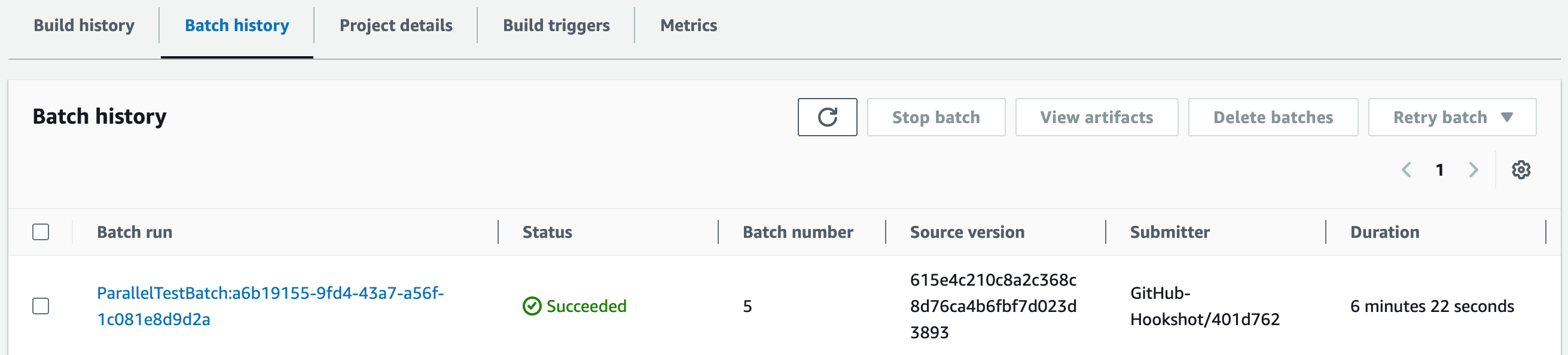

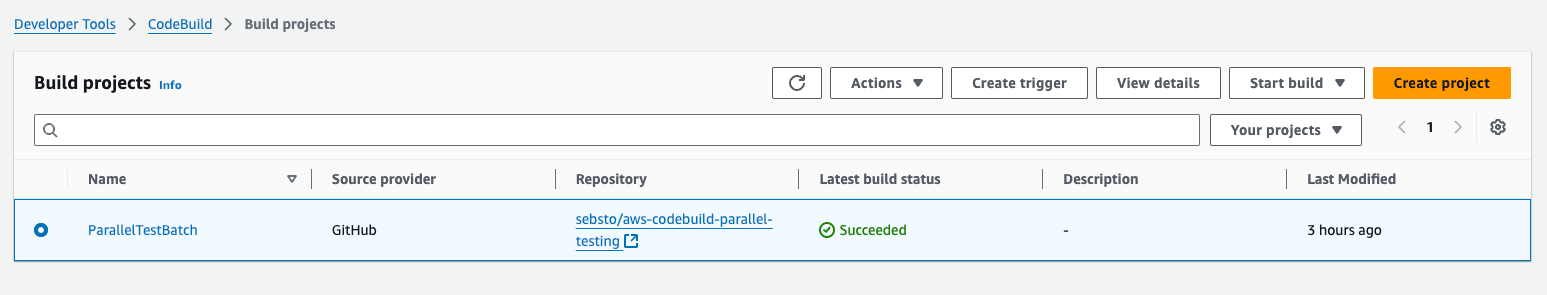

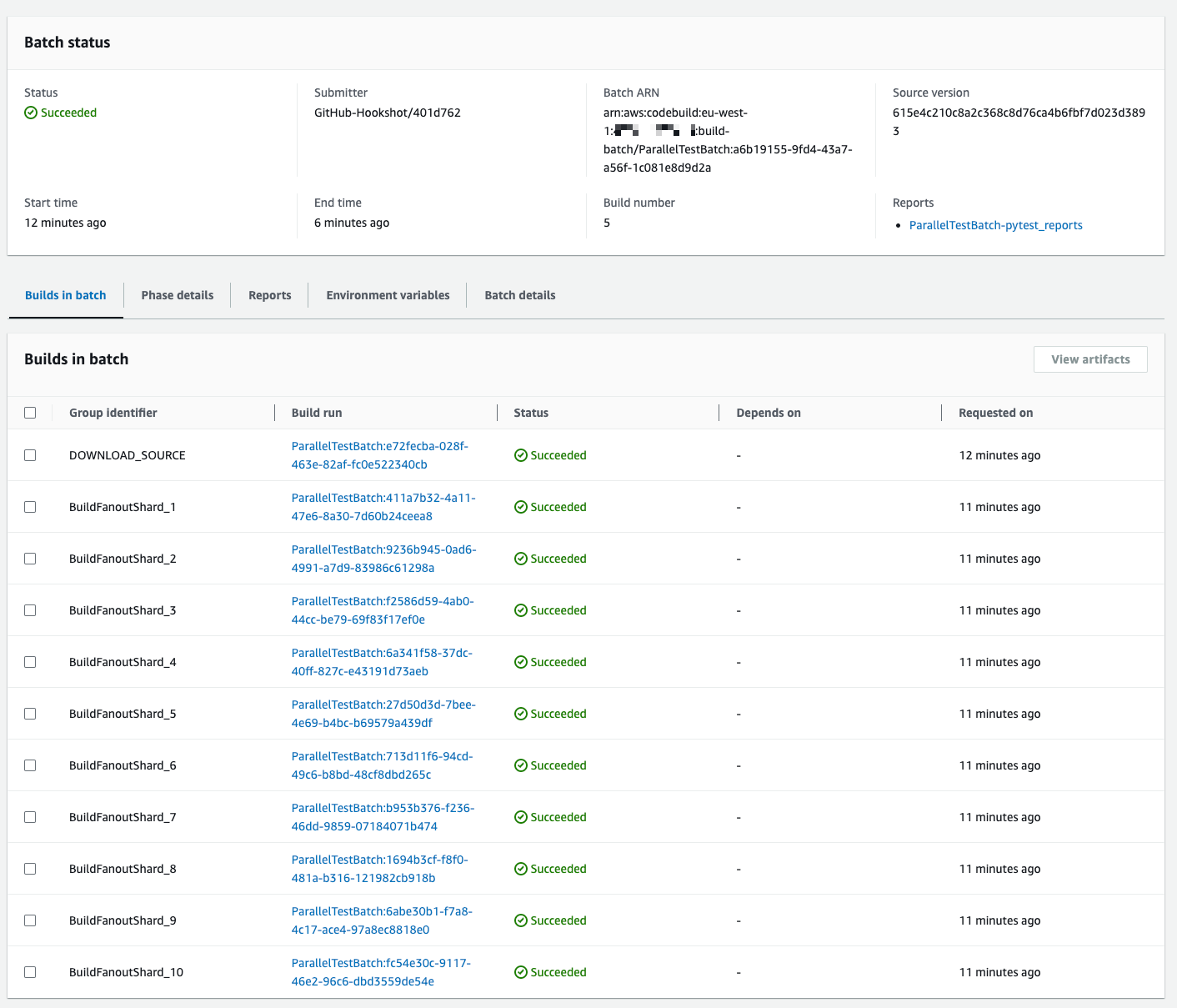

With the demo project I wrote for this post, the total test time went down from 35 minutes to 6 minutes, including the time to provision the environments. These two screenshots from the AWS Management Console show the difference.

Sequential execution of the test suite

Parallel execution of the test suite

Very long test times pose a significant challenge when running continuous integration (CI) at scale. As projects grow in complexity and team size, the time required to execute comprehensive test suites can increase dramatically, leading to extended pipeline execution times. This not only delays the delivery of new features and bug fixes, but also hampers developer productivity by forcing them to wait for build results before proceeding with their tasks. I have experienced pipelines that took up to 60 minutes to run, only to fail at the last step, requiring a complete rerun and further delays. These lengthy cycles can erode developer trust in the CI process, contribute to frustration, and ultimately slow down the entire software delivery cycle. Moreover, long-running tests can lead to resource contention, increased costs because of wasted computing power, and reduced overall efficiency of the development process.

With parallel test execution in CodeBuild, you can now run your tests concurrently across multiple build compute environments. This feature implements a sharding approach where each build node independently executes a subset of your test suite. CodeBuild provides environment variables that identify the current node number and the total number of nodes, which are used to determine which tests each node should run. There is no control build node or coordination between nodes at build time—each node operates independently to execute its assigned portion of your tests.

To enable test splitting, configure the build-fanout section under batch in your buildspec.yml, specifying the desired parallelism level and other relevant parameters. Additionally, use the codebuild-tests-run utility in your build step, along with the appropriate test commands and the chosen splitting method.

The tests are split based on the sharding strategy you specify. codebuild-tests-run offers two sharding strategies:

- Equal-distribution. This strategy sorts test files alphabetically and distributes them in chunks equally across parallel test environments. Changes in the names or quantity of test files might reassign files across shards.

- Stability. This strategy fixes the distribution of tests across shards by using a consistent hashing algorithm. It maintains existing file-to-shard assignments when new files are added or removed.
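To make the difference between the two strategies concrete, here is a minimal Python sketch. It is not the code that codebuild-tests-run uses: the function names are hypothetical, and the stable variant uses a plain hash modulo the shard count, which is a simplification of consistent hashing.

```python
import hashlib


def equal_distribution(files, total_shards):
    """Sort files alphabetically and cut them into contiguous, near-equal chunks."""
    ordered = sorted(files)
    base, extra = divmod(len(ordered), total_shards)
    shards, start = [], 0
    for index in range(total_shards):
        # The first `extra` shards receive one additional file.
        size = base + (1 if index < extra else 0)
        shards.append(ordered[start:start + size])
        start += size
    return shards


def stable_distribution(files, total_shards):
    """Assign each file to a shard using only a hash of its own path."""
    shards = [[] for _ in range(total_shards)]
    for path in sorted(files):
        digest = hashlib.sha256(path.encode("utf-8")).digest()
        shards[int.from_bytes(digest[:8], "big") % total_shards].append(path)
    return shards


if __name__ == "__main__":
    files = [f"tests/test_{n:04d}.py" for n in range(12)]
    for index, shard in enumerate(equal_distribution(files, 3)):
        print(index, shard)
```

In the first function, adding one file near the start of the alphabet can shift the chunk boundaries and move other files to a different shard. In the second, a file's shard depends only on its own path, so existing assignments stay put as long as the number of shards does not change.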

CodeBuild supports automatic merging of test reports when running tests in parallel. With automatic test report merging, CodeBuild consolidates test reports into a single test summary, simplifying result analysis. The merged report includes aggregated pass/fail statuses, test durations, and failure details, reducing the need for manual report processing. You can view the merged results in the CodeBuild console, retrieve them using the AWS Command Line Interface (AWS CLI), or integrate them with other reporting tools to streamline test analysis.
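CodeBuild does the merging for you, but to illustrate what aggregating reports involves, here is a rough Python sketch that sums the counters of several JUnit XML reports. The function name is hypothetical, and it assumes the common JUnit layout where a testsuite element carries tests, failures, errors, skipped, and time attributes. It is not how CodeBuild implements merging.

```python
import xml.etree.ElementTree as ET


def summarize_junit(xml_documents):
    """Sum the counters of the test suites found in the given JUnit XML strings."""
    totals = {"tests": 0, "failures": 0, "errors": 0, "skipped": 0, "time": 0.0}
    for document in xml_documents:
        root = ET.fromstring(document)
        # The root is either a single <testsuite> or a <testsuites> wrapper.
        suites = [root] if root.tag == "testsuite" else root.findall("testsuite")
        for suite in suites:
            for key in ("tests", "failures", "errors", "skipped"):
                totals[key] += int(suite.get(key, 0))
            totals["time"] += float(suite.get("time", 0.0))
    totals["passed"] = (
        totals["tests"] - totals["failures"] - totals["errors"] - totals["skipped"]
    )
    return totals
```

Each shard would contribute one XML document, and the returned dictionary holds the combined totals.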

Let’s look at how it works

Let me demonstrate how to implement parallel testing in a project. For this demo, I created a very basic Python project with hundreds of tests. To speed things up, I asked Amazon Q Developer on the command line to create a project and 1,800 test cases. Each test case is in a separate file and takes one second to complete. Running all tests in a sequence requires 30 minutes, excluding the time to provision the environment.

In this demo, I run the test suite on ten compute environments in parallel and measure how long it takes to run the suite.

To do so, I added a buildspec.yml file to my project.

    version: 0.2

    batch:
      fast-fail: false
      build-fanout:
        parallelism: 10 # ten runtime environments
        ignore-failure: false

    phases:
      install:
        commands:
          - echo 'Installing Python dependencies'
          - dnf install -y python3 python3-pip
          - pip3 install --upgrade pip
          - pip3 install pytest
      build:
        commands:
          - echo 'Running Python Tests'
          - |
            codebuild-tests-run \
              --test-command 'python -m pytest --junitxml=report/test_report.xml' \
              --files-search "codebuild-glob-search 'tests/test_*.py'" \
              --sharding-strategy 'equal-distribution'
      post_build:
        commands:
          - echo "Test execution completed"

    reports:
      pytest_reports:
        files:
          - "*.xml"
        base-directory: "report"
        file-format: JUNITXML

There are three parts to highlight in the YAML file.

First, there’s a build-fanout section under batch. The parallelism setting tells CodeBuild how many test environments to run in parallel. The ignore-failure setting indicates whether a failure in any of the fanout build tasks can be ignored.

Second, I use the pre-installed codebuild-tests-run command to run my tests.

This command receives the complete list of test files and decides which of the tests must be run on the current node.

- Use the sharding-strategy argument to choose between equal distribution and stable distribution, as I explained earlier.

- Use the files-search argument to pass all the files that are candidates for a run. We recommend using the provided codebuild-glob-search command for performance reasons, but any file search tool, such as find(1), will work.

- Use the test-command argument to pass the actual test command to run on the shard.
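As a mental model of how these arguments fit together, here is a small Python sketch. It assumes that the files selected for a shard are appended to the test command as space-separated arguments. The function name is hypothetical, and the real codebuild-tests-run tool may work differently internally.

```python
import shlex


def build_shard_command(test_command, shard_files):
    """Return the argv list for one shard: the test command followed by its files."""
    return shlex.split(test_command) + list(shard_files)


if __name__ == "__main__":
    argv = build_shard_command(
        "python -m pytest --junitxml=report/test_report.xml",
        ["tests/test_0001.py", "tests/test_0002.py"],
    )
    # Show the command line a shard would run under this assumption.
    print(shlex.join(argv))
```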

Lastly, the reports section instructs CodeBuild to collect and merge the test reports from each node.

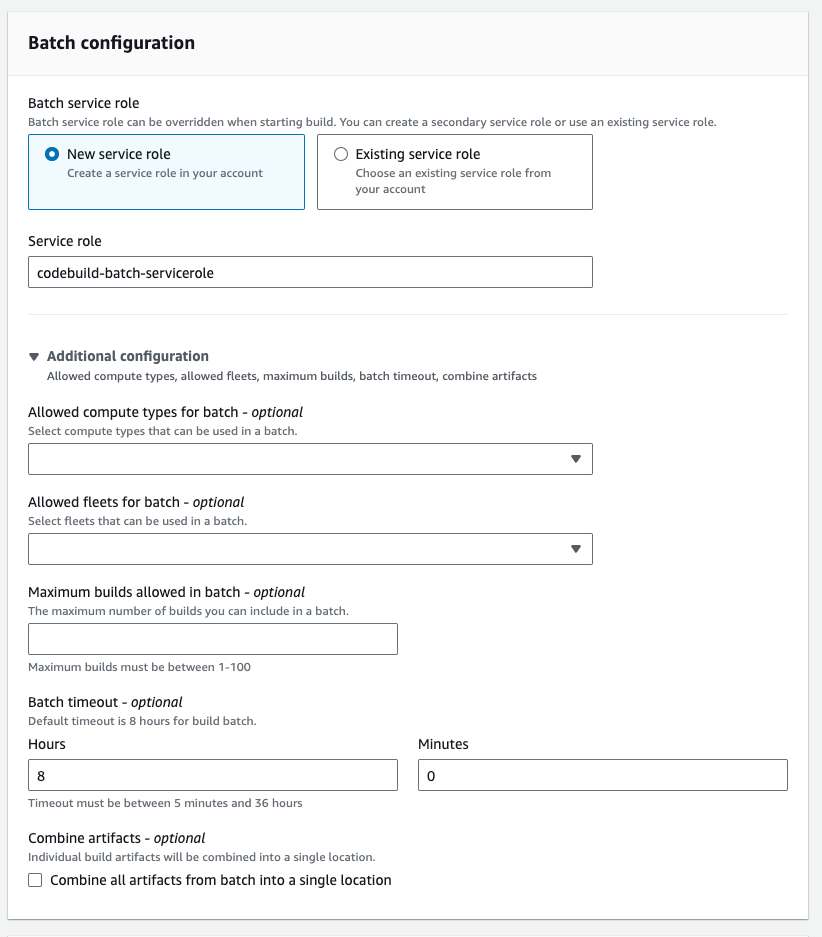

Then, I open the CodeBuild console to create a project and a batch build configuration for this project. There’s nothing new here, so I’ll spare you the details. The documentation has all the details to get you started. Parallel testing works on batch builds. Make sure to configure your project to run in batch.

Now, I’m ready to trigger an execution of the test suite. I can commit new code to my GitHub repository or trigger the build in the console.

After a few minutes, I see a status report of the different steps of the build, with a status for each test environment or shard.

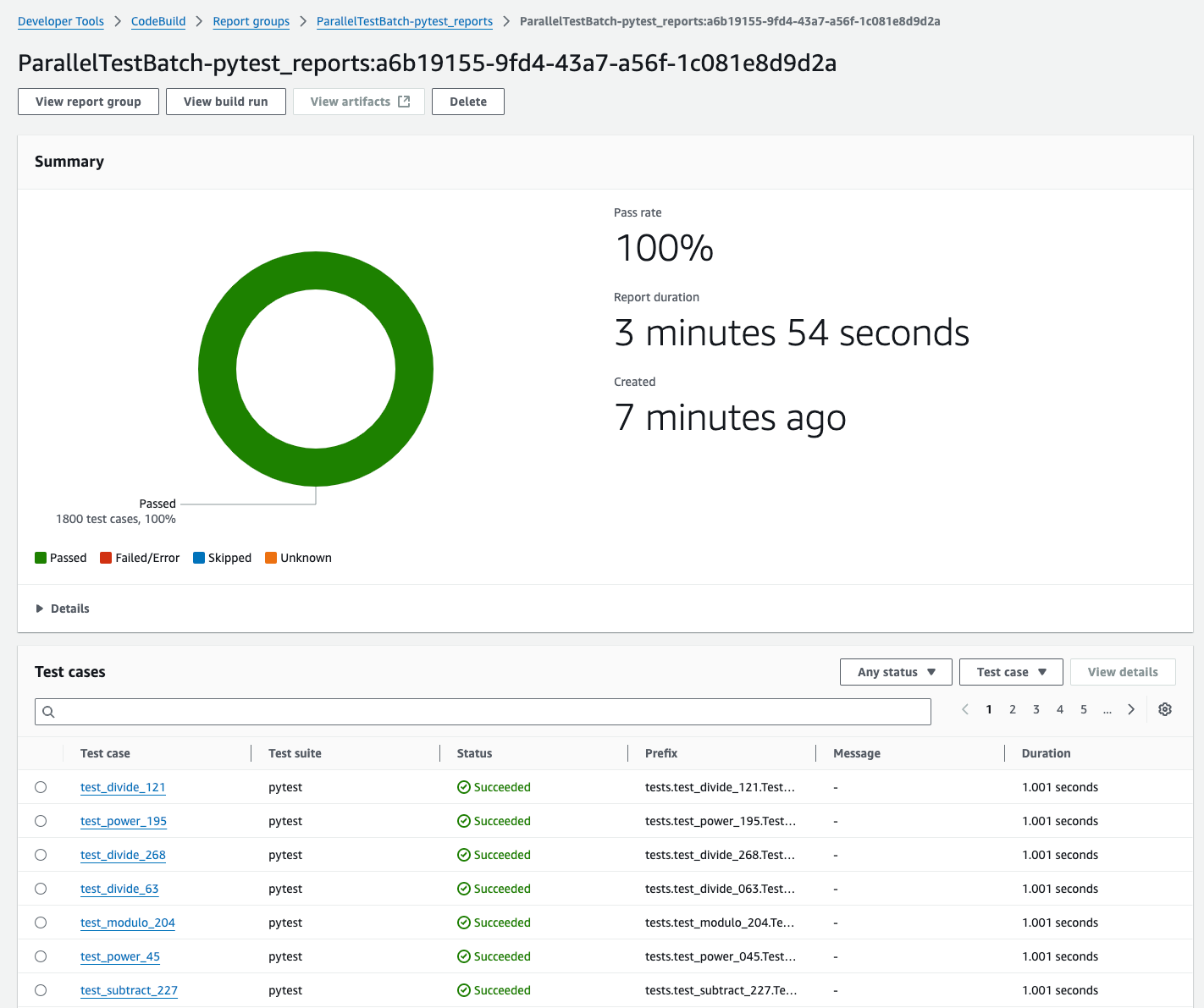

When the test is complete, I select the Reports tab to access the merged test reports.

The Reports section aggregates all test data from all shards and keeps the history for all builds. I select my most recent build in the Report history section to access the detailed report.

As expected, I can see the aggregated and the individual status for each of my 1,800 test cases. In this demo, they’re all passing, and the report is green.

The 1,800 tests of the demo project take one second each to complete. When I ran this test suite sequentially, it took 35 minutes to complete. When I ran the test suite in parallel on ten compute environments, it took 6 minutes to complete, including the time to provision the environments. The parallel run took about 17 percent of the time of the sequential run (6 of 35 minutes). Actual numbers will vary with your projects.
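The pure test time behind these measurements can be worked out with simple arithmetic. This is an idealized Python sketch that assumes tests are spread evenly and ignores provisioning and other overhead. The function name is made up for illustration.

```python
import math


def ideal_parallel_seconds(test_count, seconds_per_test, shards):
    """Test time of the busiest shard when tests are spread as evenly as possible."""
    return math.ceil(test_count / shards) * seconds_per_test


sequential = 1800 * 1                           # 1,800 s, or 30 min of pure test time
parallel = ideal_parallel_seconds(1800, 1, 10)  # 180 tests per shard, or 3 min
print(sequential / 60, parallel / 60)
```

The gap between these ideal figures (30 and 3 minutes) and the measured ones (35 and 6 minutes) is overhead outside the tests themselves, such as provisioning the environments.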

Additional things to know

This new capability is compatible with all testing frameworks. The documentation includes examples for Django, Elixir, Go, Java (Maven), JavaScript (Jest), Kotlin, PHPUnit, Pytest, Ruby (Cucumber), and Ruby (RSpec).

For test frameworks that don’t accept space-separated lists, the codebuild-tests-run CLI provides a flexible alternative through the CODEBUILD_CURRENT_SHARD_FILES environment variable. This variable contains a newline-separated list of test file paths for the current build shard. You can use it to adapt to different test framework requirements and format test file names.
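For example, a small helper script could read the variable and reformat the list. This Python sketch assumes a hypothetical test runner that expects a comma-separated list. Both function names are invented for illustration.

```python
import os


def shard_files_from_env(environ=os.environ):
    """Read the newline-separated file list for the current shard."""
    raw = environ.get("CODEBUILD_CURRENT_SHARD_FILES", "")
    return [line.strip() for line in raw.splitlines() if line.strip()]


def as_comma_separated(files):
    """Format the list for a hypothetical runner that expects 'a,b,c'."""
    return ",".join(files)


if __name__ == "__main__":
    print(as_comma_separated(shard_files_from_env()))
```

Passing the environment as a parameter keeps the helper easy to test outside of CodeBuild.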

You can further customize how tests are split across environments by writing your own sharding script and using the CODEBUILD_BATCH_BUILD_IDENTIFIER environment variable, which is automatically set in each build. You can use this technique to implement framework-specific parallelization or optimization.
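A custom sharding script could look roughly like the following Python sketch. The identifier format is an assumption: I assume the value ends with the shard number (the default example_shard_3 is made up), so check the actual value in your own builds before relying on it. The function names and the hardcoded shard count are hypothetical too.

```python
import os
import re


def shard_index_from_identifier(identifier):
    """Extract a trailing integer from the identifier, or None if there is none.

    ASSUMPTION: the identifier ends with the shard number.
    """
    match = re.search(r"(\d+)$", identifier)
    return int(match.group(1)) if match else None


def pick_files(files, shard_index, total_shards):
    """Round-robin: each shard takes every total_shards-th file of the sorted list."""
    # The modulo makes this work whether shard numbers start at 0 or at 1.
    return sorted(files)[shard_index % total_shards::total_shards]


if __name__ == "__main__":
    identifier = os.environ.get("CODEBUILD_BATCH_BUILD_IDENTIFIER", "example_shard_3")
    index = shard_index_from_identifier(identifier)
    files = [f"tests/test_{n:04d}.py" for n in range(20)]
    if index is not None:
        print(pick_files(files, index, 10))
```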

Pricing and availability

With parallel test execution, you can now complete your test suites in a fraction of the time previously required, accelerating your development cycle and improving your team’s productivity.

Parallel test execution is available on all three compute modes offered by CodeBuild: on-demand, reserved capacity, and AWS Lambda compute.

This capability is available today in all AWS Regions where CodeBuild is offered, with no additional cost beyond the standard CodeBuild pricing for the compute resources used.

I invite you to try parallel test execution in CodeBuild today. Visit the AWS CodeBuild documentation to learn more and get started with parallelizing your tests.

— seb

PS: Here’s the prompt I used to create the demo application and its test suite: “I’m writing a blog post to announce codebuild parallel testing. Write a very simple python app that has hundreds of tests, each test in a separate test file. Each test takes one second to complete.”
